Fairness

Standards for Educational and Psychological Testing

According to the Standards, “fairness is a fundamental validity issue and requires attention throughout all stages of test development and use” (AERA, APA, & NCME, 2014, p. 49). Although fairness is a critical consideration in test development, the term has no specific technical or empirical meaning; it is, to a large extent, a conceptual issue. Historically, fairness has been conceived largely as a matter of measurement bias. As a result, it was examined only in the context of inherent psychometric problems, most often rooted in concerns related to reliability (e.g., item content, item sequence or difficulty, accuracy, and consistency). Such psychometric bias was rarely found, which led many to conclude that a test could be applied without difficulty to any group or subgroup of individuals. However, as the Standards make clear, fairness rests on the whole array of considerations throughout test development and use. Under this broader view, the idea of fairness expands significantly, and a set of standards must be met if a test is to claim that it is not just fair, but fair for all subgroups of test takers. Because the Ortiz PVAT was designed to measure the vocabulary acquisition of both native English speakers and English learners, fairness for these populations was both an overarching design consideration and a fundamental goal.

Reliability is, of course, a paramount concern in test development; for the Ortiz PVAT, it has already been addressed in detail in the previous sections of this chapter (see Reliability). However, strong reliability evidence alone is not a sufficient indicator of validity or fairness. Beyond the absence of measurement bias, the current edition of the Standards (AERA, APA, & NCME, 2014) outlines two specific elements of fairness evidence: cognitive or response processes, and consequences of testing. Response processes refer to steps taken during the development and administration of an assessment to minimize barriers to valid score interpretation for the widest possible range of individuals and relevant subgroups. Consequences of testing refer to safeguards taken to prevent inappropriate use and interpretation of test scores. The remainder of this section describes how the design and construction of the Ortiz PVAT incorporated these two elements of fairness from the outset, and then presents evidence of the degree to which the Ortiz PVAT is fair to native English speakers and English learners alike, particularly through the development of dual norms. Fairness with respect to other demographic groups is addressed in a later section of this chapter, Generalizability Across Groups.

Response Processes

Cognitive abilities are latent variables by nature (i.e., unobservable or intangible), and their measurement is thus accomplished by inference. In the case of a receptive vocabulary test, it is presumed that the individual either knows or does not know the meaning of the word being presented. If the examinee knows and recognizes the word, their subsequent response is viewed as an attempt to reveal that knowledge. On the Ortiz PVAT, examinees may convey their comprehension of the word in several ways: pointing to the correct picture, clicking the correct picture with a mouse or tapping it on a touch-enabled screen, or telling the examiner which picture represents their choice. This high degree of accessibility addresses fairness in treatment during the testing process (AERA, APA, & NCME, 2014). The availability of different response methods is specifically intended to prevent unintentional inhibition of the desired response. For example, an individual with motor difficulties may be unable to point to or click on their choice, and the alternative response methods accommodate this. Providing different input methods does not interfere with the nature of the measurement, because the only relevant response process is the choice being made. Furthermore, during standardization, individuals in both normative samples were randomly assigned to use the mouse or the touchscreen (although they were free to switch input methods as needed), so the standardization data reflect a variety of input methods.

A bigger issue, however, is that an individual who does not know the meaning of a word may still select the correct response by chance. Such a case is a false positive: it indicates comprehension of a word that the examinee does not actually understand. To address this issue, several design decisions were made during development of the Ortiz PVAT to make it unlikely that an examinee’s responses are due to pure guessing or luck. By carefully considering the arrangement, orientation, intensity, consistency, coloring, and other aspects of the stimuli, the developers were able to create items whose false positive rates were in line with expectations and requirements. In addition, the false positive (i.e., guessing, or c) parameter of the IRT model was used to evaluate the results of the pilot phase and to select the final test items. Where these efforts failed to produce an item with an acceptable false positive rate, the item was modified, replaced, or dropped altogether (see chapter 6, Development, for more details on the item development and selection process).
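To illustrate what the guessing parameter represents, the sketch below uses the standard three-parameter logistic (3PL) item response function. It is a minimal sketch assuming the 3PL form without the 1.7 scaling constant; the item parameters are hypothetical and are not Ortiz PVAT values. The lower asymptote c is the probability that an examinee with very low ability still selects the correct picture.

```python
import math


def p_correct_3pl(theta: float, a: float, b: float, c: float) -> float:
    """Three-parameter logistic (3PL) probability of a correct response.

    theta: examinee ability
    a: item discrimination
    b: item difficulty
    c: lower asymptote (pseudo-guessing, or false positive, parameter)
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical item parameters, for illustration only (not Ortiz PVAT values).
a, b, c = 1.2, 0.5, 0.20
for theta in (-4.0, 0.5, 4.0):
    print(f"theta = {theta:+.1f}  P(correct) = {p_correct_3pl(theta, a, b, c):.3f}")
```

Even at very low ability the probability stays just above c, and at theta = b it equals c + (1 - c)/2 (here .60). An item with a smaller c leaves less room for correct-by-chance responses, which is why a low guessing parameter is a useful item selection criterion.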

Another design consideration was the use of a set of Screener items to determine an individual’s starting point on the test. The Screener was developed to ensure that examinees are placed at an appropriate starting point, so that a baseline and initial task comprehension can be established. It also serves to limit the number of items administered: examinees do not need to attempt items beyond the narrow range required to measure their receptive vocabulary acquisition. Because Screener performance is closely correlated with performance on the full test, the administration can be reduced to just the critical section. Items included in the Screener were selected because of their low guessing parameters (see Creation of Screener Items in chapter 6, Development), which reduces the impact of false positives.

A final design consideration to address guessing was the specification of a ceiling rule to stop the administration of the Ortiz PVAT. The ceiling rule uses statistical probability to determine when an examinee is likely to be simply guessing rather than actually knowing the correct response (further details are provided in chapter 6, Development). Specifically, the ceiling rule dynamically evaluates performance across the 10 most recently administered items and terminates testing when it becomes likely that responding has become random rather than intentional. As a result, guessing or random selection is unlikely to play a substantial role in determining test performance, because testing stops once it is clear that the items have exceeded the examinee’s ability. The ability to distinguish between the individual’s true knowledge and spurious responses that reflect false positives is a strong example of fairness evidence for the Ortiz PVAT, both with regard to treatment during the testing process and with regard to the associated cognitive processes.
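The exact ceiling criteria are documented in chapter 6, Development, and are not reproduced here. As a rough illustration only, the sketch below shows one way a probability-based ceiling over a window of 10 items could work, using a one-sided binomial test. The number of response options (four, giving a chance rate of .25), the .05 threshold, and the function names are hypothetical assumptions for this example, not the published rule.

```python
from math import comb


def binomial_tail(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def should_stop(responses: list[int], window: int = 10,
                chance: float = 0.25, alpha: float = 0.05) -> bool:
    """Hypothetical ceiling check on the most recent `window` items.

    responses: 1 = correct, 0 = incorrect, in administration order.
    Returns True when the number correct in the window is NOT significantly
    above what random guessing would produce (one-sided binomial test).
    Window size, chance rate, and alpha are illustrative assumptions.
    """
    if len(responses) < window:
        return False  # not enough items administered to evaluate the window
    n_correct = sum(responses[-window:])
    return binomial_tail(n_correct, window, chance) >= alpha


# 5 of the last 10 correct: P(X >= 5 | guessing) is about .078, so stop.
print(should_stop([1] * 20 + [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]))  # True
# 6 of the last 10 correct: P(X >= 6 | guessing) is about .020, so continue.
print(should_stop([1] * 20 + [1, 1, 0, 1, 0, 1, 0, 1, 0, 1]))  # False
```

The design choice in this sketch is to stop only when recent performance is statistically indistinguishable from guessing, rather than after a fixed count of errors.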

Consequences of Testing

One of the main goals of developing the Ortiz PVAT was to create a test that directly addresses one of the most problematic consequences of testing English learners: failure to account for how differences in language development and proficiency (as compared to native English speakers) attenuate performance and create an impression of “disorder” rather than merely “difference.” Although the challenges of testing English learners without adverse consequences have long been recognized, they have not been effectively addressed. Some language-reduced tests attempted to remove the role of language from the equation altogether, but some degree of language and cultural knowledge was still required to administer and understand the basic task. Moreover, as noted in chapter 2, Theory and Background, the measurement of language and language-related abilities (e.g., vocabulary acquisition) is simply too relevant and valuable for understanding an individual’s overall academic success and development; as such, the effects of the examinee’s native language and opportunity to learn a language should not be ignored. Ignoring them raises the concern that a test may fail to represent the entirety of the intended construct and therefore becomes subject to adverse, if not discriminatory, consequences when used with linguistically diverse examinees. Attempts to minimize the role of language are problematic because language is a rather unique ability: its measurement cannot be separated from the language of the test being used to measure it. This fact does not make tests of language inherently invalid, because the construct being measured remains the same, namely language ability. In other words, a test of English vocabulary acquisition administered to someone who does not speak English is not necessarily invalid; it would correctly indicate that the individual has no vocabulary in English. The score does not indicate, however, that the individual has no vocabulary in any language, and herein lies the problem regarding consequences of testing: misinterpretation.

When a test designed to measure language is given to individuals who are all native speakers of that language, who have all had relatively equal exposure to and opportunity to learn that language, and who have been largely educated in the same manner in that language, relative performance comparisons can be made. Lower performance rightly indicates some degree of delay in the development or acquisition of language skills and may suggest the presence of a disorder of some kind. When that same test is administered to individuals who are not native speakers of the test’s language, who have not had equal exposure to or opportunity to learn the language of the test, or who have been educated in that language but are at a point of development different from that of their monolingual native-speaking peers, relative performance comparisons become inherently discriminatory. In such cases, lower scores are often misinterpreted as signs of a possible disorder, on the assumption that the examinee lacks the skills and knowledge that native speakers of the language possess. In reality, however, low scores may simply reflect a lack of opportunity or experience rather than a language-related impairment. The result is misidentification of intrinsic deficits and unwarranted assumptions of limited ability, both of which have serious consequences for an individual’s educational trajectory and programming.

In general, test publishers do not have full control over how their tests are used and interpreted. Many publishers make good-faith attempts to warn users either not to use their tests with individuals who are not properly represented in the norm sample or to take into account the salient variables that could alter interpretation of the derived scores. For whatever reason, however, none of this has stemmed the tide of English learners misidentified through the use of language-based tests, as indicated by the persistently and disproportionately high number of English learners identified as having disabilities in the U.S. The most obvious solution to the negative consequences of language testing with English learners is the creation of normative samples composed of individuals who are not monolingual (i.e., bilinguals) and who can be matched to examinees on different degrees of exposure to the test’s language and opportunity to learn it. In addition, if exposure is indexed to a language that all individuals in the normative sample have in common, there is a clear path to creating a true peer group, one that has the potential to eliminate the adverse consequences associated with testing non-native speakers by ensuring valid interpretation of individual test scores.

Based on these factors, dual norming was built into the initial design and construction of the Ortiz PVAT. When considering issues related to access to early language learning experiences and vocabulary acquisition, it was clear that English should serve as the language of administration, because it is the one language that every individual in the U.S. has a common opportunity to learn. It also serves as the standard by which academic attainment and educational growth are typically measured. It is not sufficient, however, to simply gather a sample of English learners and stratify it on the usual demographic characteristics. Doing so would imply that English learners are comparable merely because they are English learners when, in reality, some individuals have far more exposure to, and developmental proficiency in, English than other English learners of the same age. A unique quality of language learning is that it can begin at any point after birth; therefore, exposure to English is a variable that must be accounted for in stratifying the English Learner normative sample. For monolingual English speakers, age is a sufficient variable to control for comparability of language exposure and opportunity for learning, but the same cannot be said for English learners. Thus, while establishing a normative sample for native English speakers is relatively straightforward, doing the same for English learners requires incorporating exposure to English. As discussed in chapter 2, Theory and Background, the typical two-dimensional criteria for constructing a normative sample are inadequate for English learners and must be extended to three dimensions by incorporating differential exposure to English.

The development of dual norms is therefore not merely a concession to the idea that English learners require their own true peer group; it also recognizes that English Learner norms are inherently different from, and far more complicated than, typical normative samples. This added complexity, however, is precisely what is necessary to address the concerns raised about the consequences of testing English learners. The construction of dual norms for the Ortiz PVAT enables non-discriminatory evaluation of vocabulary acquisition in native English speakers and English learners alike.

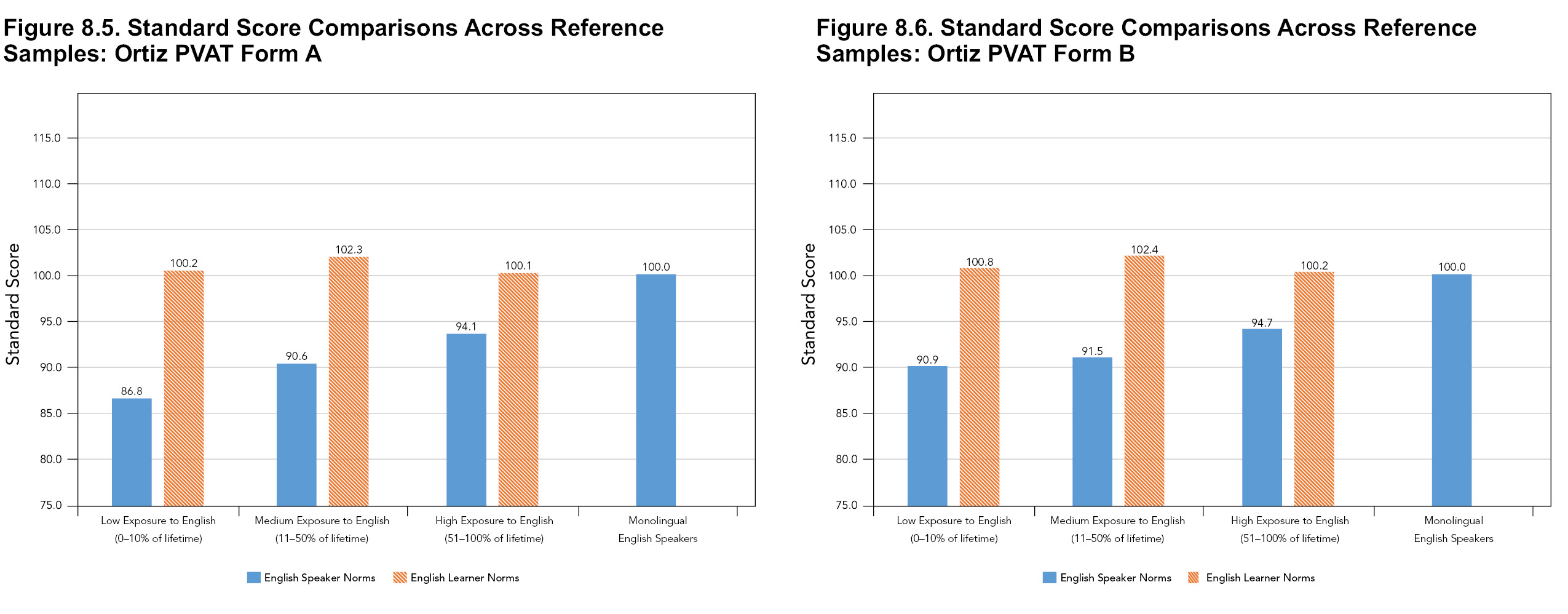

To properly model the rate of acquisition and the varying ability associated with different levels of exposure to English, it was critical to account for exposure to English in the scoring procedure for English Learner standard scores (see chapter 7, Standardization, for more details about the creation of standard scores). The need for separate norms became clear when the scores of English learners were computed using the English Speaker norms. As seen in Figures 8.5 and 8.6, when scored against the English Speaker normative sample, individuals in the English Learner normative sample scored consistently below 100 (the English Speaker normative sample mean), especially when exposure to English was low (i.e., 0–10% of the lifespan). The highest exposure group (i.e., 51–100% of the lifespan) most closely mirrored the scores of monolingual English speakers, as expected given their substantial exposure to English. However, when scored with the English Learner norms, the scores of individuals in the English Learner sample fell in the Average range (standard score = 90–109) at all levels of exposure. These results demonstrate that English learners achieve developmentally typical scores when compared against a valid reference group of multilingual peers and when exposure is factored into the scoring process. This finding supports the validity of dual norms and the necessity of creating two reference samples, and it underscores the importance of considering the fairness of the comparison group when interpreting scores.
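The effect of changing the reference group can be illustrated with simple arithmetic. The sketch below uses a linear standard score (mean 100, SD 15) as a stand-in for the norming procedure described in chapter 7, Standardization, which it does not reproduce; the examinee’s age, raw score, and the reference-group means and standard deviations are all invented. Exposure is expressed as the percentage of the lifespan spent exposed to English, matching the way the exposure groups are defined above.

```python
def exposure_percent(years_exposed: float, age_years: float) -> float:
    """Share of the lifespan (in %) during which the examinee has been exposed to English."""
    return 100.0 * years_exposed / age_years


def standard_score(raw: float, ref_mean: float, ref_sd: float) -> float:
    """Simplified linear standard score (M = 100, SD = 15) against a reference group.

    Illustrative stand-in only; not the manual's norming procedure.
    """
    return 100.0 + 15.0 * (raw - ref_mean) / ref_sd


def in_average_range(ss: float) -> bool:
    """Average range as stated in the text: standard scores 90-109."""
    return 90 <= round(ss) <= 109


# Hypothetical 10-year-old with 1 year of English exposure and a raw score of 60.
print(exposure_percent(1, 10))  # 10.0 -> the 0-10% exposure group

# Invented reference-group statistics for the same age (illustration only).
vs_english_speakers = standard_score(60, ref_mean=80, ref_sd=10)
vs_el_low_exposure = standard_score(60, ref_mean=58, ref_sd=10)
print(vs_english_speakers, in_average_range(vs_english_speakers))  # 70.0 False
print(vs_el_low_exposure, in_average_range(vs_el_low_exposure))    # 103.0 True
```

The same raw score yields very different standard scores depending on whether the comparison group shares the examinee’s level of exposure to English.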

Generalizability Across Groups

Investigation of the assessment’s sensitivity to individual characteristics other than vocabulary ability is an additional source of evidence for the fairness of the Ortiz PVAT (AERA, APA, & NCME, 2014). To examine the generalizability of scores, the effects of demographic group membership were analyzed by comparing group means. A series of ANCOVAs was conducted to compare scores across demographic variables (i.e., gender, parental education level [PEL], and racial/ethnic group for the English Speaker normative sample; gender, PEL, and language spoken for the English Learner normative sample). In each analysis, standard scores (examined separately for the English Speaker and English Learner normative samples) were the dependent variable, the target demographic variable was the independent variable, and the remaining demographic characteristics were statistically controlled. A conservative alpha level of .01 was used to control for Type I errors that could arise from multiple comparisons. In addition to significance levels, measures of effect size (Cohen’s d and partial η²) are reported.
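The two effect size indices named above can be computed as follows. This is a minimal sketch of the conventional formulas: Cohen’s d with a pooled standard deviation, and partial η² from sums of squares. The scores and sums of squares are made up, and the text does not state whether d was computed on covariate-adjusted or unadjusted means, so the sketch shows only the basic formulas. In practice, the sums of squares would come from the ANCOVA output of a statistical package.

```python
import math
from statistics import mean, variance


def cohens_d(group1: list[float], group2: list[float]) -> float:
    """Cohen's d: mean difference divided by the pooled standard deviation.

    A negative value means group2 scored higher than group1.
    """
    n1, n2 = len(group1), len(group2)
    pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    return (mean(group1) - mean(group2)) / math.sqrt(pooled_var)


def partial_eta_squared(ss_effect: float, ss_error: float) -> float:
    """Partial eta squared: SS_effect / (SS_effect + SS_error)."""
    return ss_effect / (ss_effect + ss_error)


# Made-up standard scores for two groups (illustration only).
group_a = [100, 105, 95, 110, 90]  # mean 100
group_b = [97, 102, 92, 107, 87]   # mean 97, same spread
print(round(cohens_d(group_a, group_b), 2))
# Made-up sums of squares, as they might appear in an ANCOVA table.
print(partial_eta_squared(ss_effect=120.0, ss_error=9880.0))
```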

Another method of assessing measurement invariance is differential test functioning (DTF). Differential item functioning (DIF) was assessed during the item selection phase of the Ortiz PVAT (see chapter 6, Development), and items that displayed statistically significant and practically meaningful DIF were removed from the final item pool. DTF is analogous to DIF but operates at the level of the whole test: it evaluates whether the overall test score measures the same construct in the same way for different groups by comparing the test response functions of each group (Chalmers, Counsell, & Flora, 2016). A test response function is similar to an item characteristic curve in that it plots the expected total (raw) score as a function of receptive vocabulary ability. If a DTF analysis revealed different test response functions for different groups, test validity would be compromised, indicating the potential for bias. Therefore, DTF analyses were conducted for gender, PEL, and racial/ethnic group in the English Speaker sample, and for gender and PEL in the English Learner sample (see Language Spoken for why DTF was not conducted by language group).
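As described above, a test response function is the sum of the item response probabilities at each ability level. The sketch below assumes 3PL items (the IRT model mentioned under Response Processes includes a guessing parameter, but the exact model form is an assumption here) and uses invented item parameters for a reference group and a focal group. Evaluating the two curves across a range of ability values yields the kind of DTF graph presented in the figures that follow.

```python
import math


def p_3pl(theta: float, a: float, b: float, c: float) -> float:
    """3PL probability of a correct response to one item."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def expected_total_score(theta: float, items: list[tuple[float, float, float]]) -> float:
    """Test response function: expected raw score at ability theta (sum of item probabilities)."""
    return sum(p_3pl(theta, a, b, c) for a, b, c in items)


# Hypothetical (a, b, c) parameters for a 4-item test, estimated separately per group.
reference_items = [(1.0, -1.5, 0.2), (1.2, -0.5, 0.2), (0.9, 0.5, 0.2), (1.1, 1.5, 0.2)]
focal_items = [(1.0, -1.4, 0.2), (1.2, -0.5, 0.2), (0.9, 0.6, 0.2), (1.1, 1.5, 0.2)]

for theta in (-2.0, -1.0, 0.0, 1.0, 2.0):
    t_ref = expected_total_score(theta, reference_items)
    t_foc = expected_total_score(theta, focal_items)
    print(f"theta = {theta:+.1f}  reference = {t_ref:.2f}  focal = {t_foc:.2f}")
```

At very low ability the curve approaches the sum of the c parameters, and at very high ability it approaches the number of items.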

Demographic Effects for English Speakers

Gender

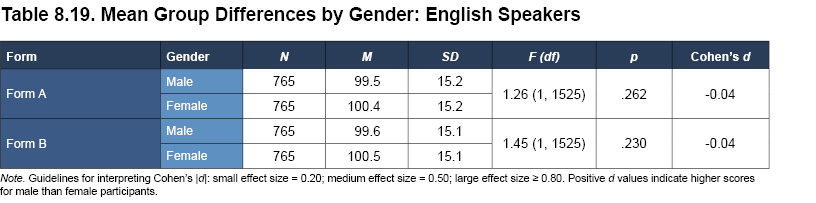

With respect to scores on Form A and Form B, possible gender differences were investigated in the English Speaker normative sample, with region, PEL, and race/ethnicity included as covariates to control for their possible effects. For both forms, there was no evidence of a statistically significant difference between male and female examinees (see Table 8.19). The main effect of gender was not significant and effect sizes were negligible (Cohen’s d = -0.04 for both Form A and Form B), demonstrating that the measurement of receptive vocabulary comprehension with the Ortiz PVAT is unaffected by gender.

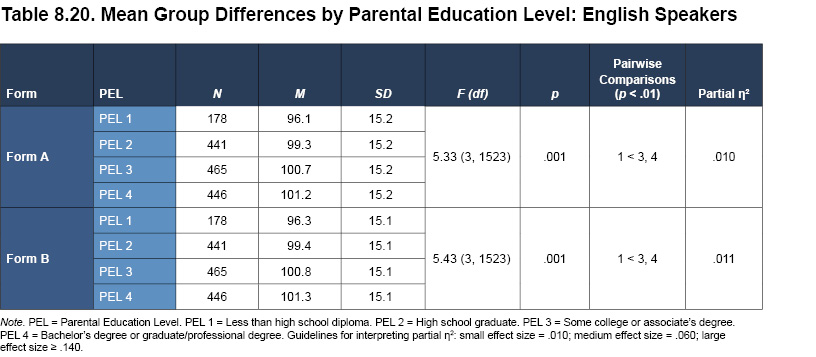

Differences by gender were also investigated with DTF. The graphs of each gender’s expected raw score as a function of receptive vocabulary ability are presented for Forms A and B in Figures 8.7 and 8.8, respectively. For both forms, the curves for the two genders overlap considerably. Because the graphs for male and female participants are so similar in both slope and position, there is no evidence of bias; that is, the underlying receptive vocabulary ability is measured equally for each gender.

Parental Education Level

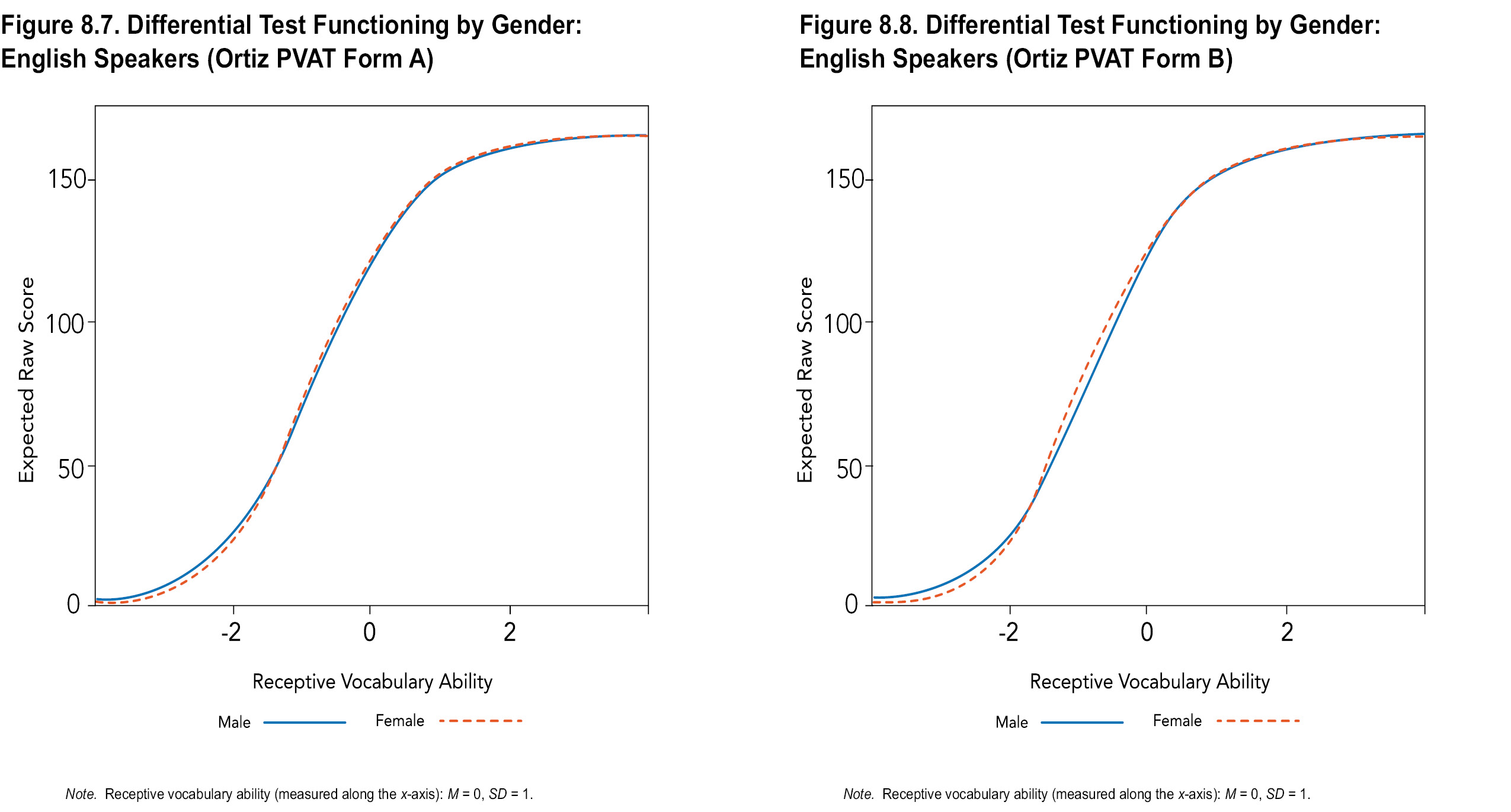

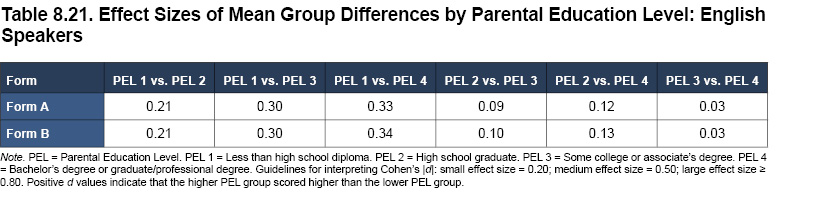

PEL is understood to influence a child’s vocabulary (e.g., Hart & Risley, 2003), such that higher PEL is associated with larger vocabularies in children. Differences in scores by PEL were investigated in the English Speaker normative sample, with the other demographic variables (i.e., gender, geographic region, and race/ethnicity) entered as covariates to control for possible confounding effects. A significant main effect was observed, indicating differences among the four levels of PEL (see Table 8.20), but the size of this effect is very small.

Given the overall effect, pairwise comparisons were examined, and the size of the differences was assessed with Cohen’s d (see Table 8.21). Small differences were observed between the lowest PEL (Less than high school diploma) and the two highest levels (Some college or associate’s degree, and Bachelor’s degree or graduate/professional degree), with higher scores for examinees whose parents had higher levels of education. The direction of these effects is in line with existing research showing that higher parental education is associated with greater vocabulary ability in children (e.g., Sénéchal & LeFevre, 2002; see also Incidental Learning Experiences in chapter 2, Theory and Background).

Although group mean score differences by PEL were observed, this finding alone does not indicate the potential for bias. Therefore, DTF by PEL was also investigated to look beyond mean differences. Given the observed mean differences between examinees whose parents did not have a high school diploma and those whose parents had education beyond high school, the four levels of PEL were grouped into two broader categories: (1) Less than high school diploma and High school graduate (N = 619), and (2) Some college or associate’s degree and Bachelor’s or graduate/professional degree (N = 911). The DTF graphs of the two PEL groups’ expected raw scores as a function of receptive vocabulary ability are presented for Form A and Form B in Figures 8.9 and 8.10, respectively. For both forms, the curves for the two PEL groups are parallel and close together, demonstrating that vocabulary ability is measured invariantly across PEL groups. This high degree of congruence indicates that the significant group mean differences by PEL do not reflect test bias. PEL is likely to influence an individual’s score on the Ortiz PVAT to some extent (i.e., higher PEL is associated with higher scores, echoing previous research; see chapter 2, Theory and Background); however, given the similarity of the DTF curves for each group, the Ortiz PVAT captures the same construct of receptive vocabulary acquisition for individuals with lower and higher PEL.

Race/Ethnicity

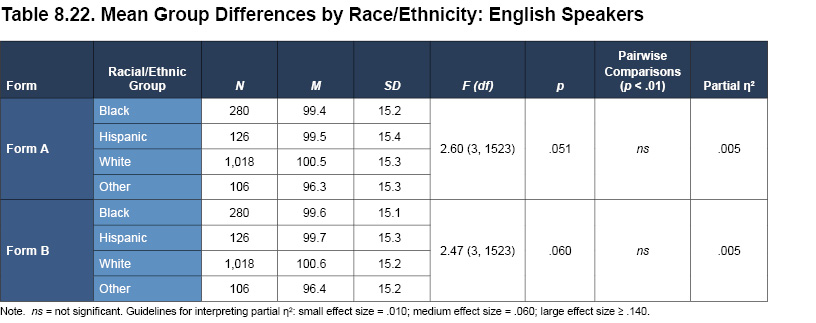

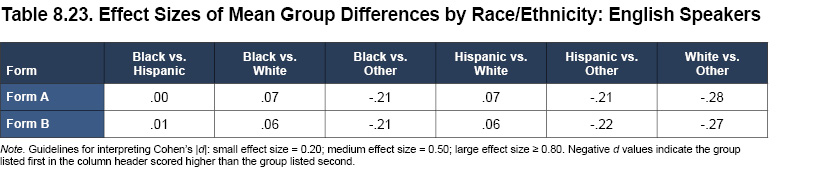

Differences across racial/ethnic groups on the Ortiz PVAT were analyzed in the English Speaker normative sample. Four groups (as defined by major U.S. Census categorizations: Black, Hispanic, White, and Other [includes Asian, Native, Multiracial, and Other]) were tested for meaningful differences in standard scores, while controlling for the effects of gender, geographic region, and PEL. The results are summarized in Table 8.22. No statistically significant effects were found for Form A or Form B, and effect sizes were negligible to small (see Table 8.23; median Cohen’s d = -0.10 for pairwise comparisons). These results provide evidence for the similarity of scores across racial/ethnic groups on both Ortiz PVAT forms.

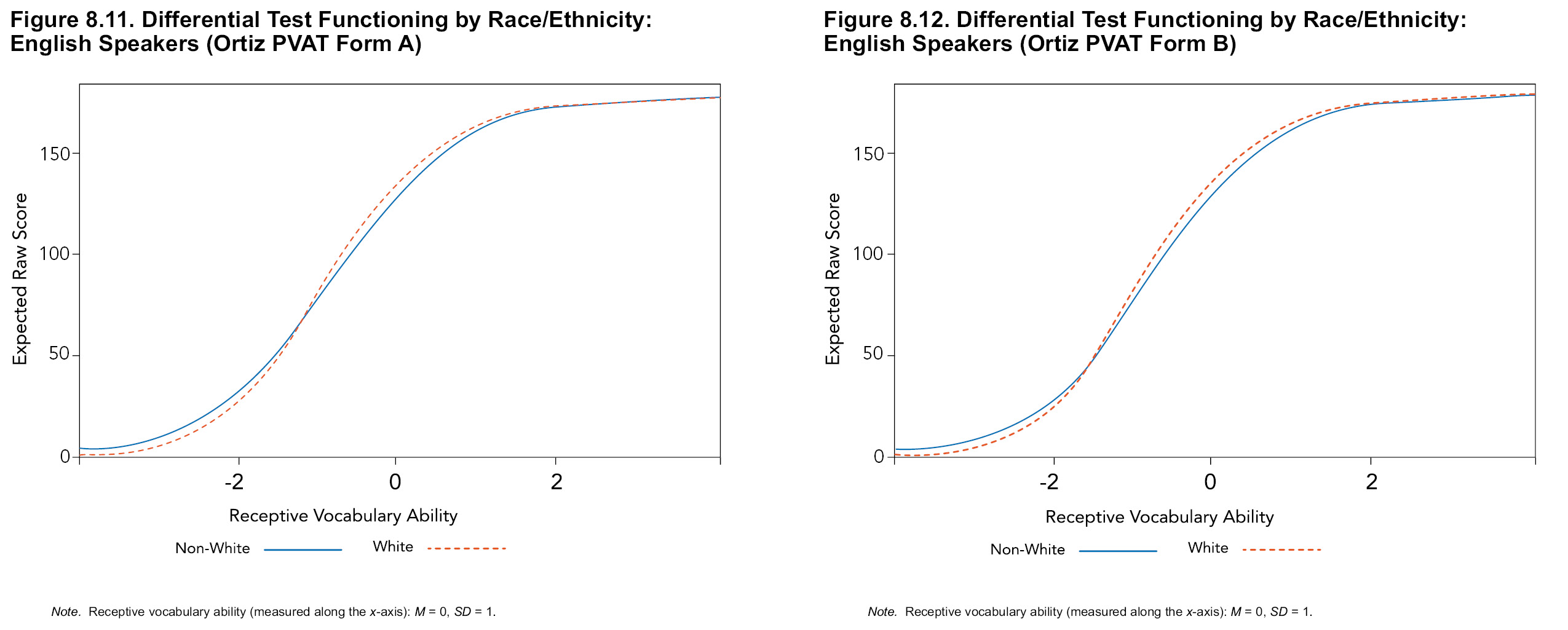

In addition to mean group differences, the potential for bias in test functioning was investigated. To test invariance with DTF for racial/ethnic groups, White individuals were defined as the reference group, and Hispanic, Black, and Other individuals were combined into a Non-White focal group. Figure 8.11 presents the test response functions for the English Speaker normative sample on Form A, with a curve plotted for each group; Figure 8.12 presents the Form B results. Visual inspection shows that the curves for the two groups are closely aligned, supporting the congruence of measurement across racial/ethnic groups. Although the curves cross, the difference between them is slight: at some ability levels the test may slightly favor one group, while at others it may slightly favor the other group. Overall, these effects cancel out, and the net impact is negligible (Chalmers, Counsell, & Flora, 2016). This finding provides further support for the generalizability of the test, demonstrating invariance across racial/ethnic groups and a lack of empirical measurement bias.
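The offsetting described above corresponds to the distinction Chalmers, Counsell, and Flora (2016) draw between signed DTF, which averages the difference between two test response functions over the ability distribution so that opposite-signed differences can cancel, and unsigned DTF, which averages the absolute difference. The sketch below approximates both by simple numerical integration over a standard normal ability density. The item parameters are invented so that the curves cross, the 3PL form is an assumption, and the sampling-variability component of the Chalmers et al. approach is omitted; the sketch illustrates the idea rather than reproducing the analyses reported here.

```python
import math


def p_3pl(theta, a, b, c):
    """3PL probability of a correct response to one item."""
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))


def expected_total_score(theta, items):
    """Test response function: sum of item probabilities at ability theta."""
    return sum(p_3pl(theta, a, b, c) for a, b, c in items)


def dtf_statistics(ref_items, foc_items, half_width=600, step=0.01):
    """Approximate signed and unsigned DTF over a standard normal ability density.

    Uses a symmetric grid theta = k * step for k in [-half_width, half_width].
    """
    sdtf = udtf = 0.0
    for k in range(-half_width, half_width + 1):
        theta = k * step
        weight = math.exp(-0.5 * theta * theta) / math.sqrt(2 * math.pi) * step
        diff = expected_total_score(theta, ref_items) - expected_total_score(theta, foc_items)
        sdtf += diff * weight       # differences of opposite sign can offset
        udtf += abs(diff) * weight  # total separation between the curves
    return sdtf, udtf


# Hypothetical two-item test: item 1 is slightly easier for the reference group,
# item 2 slightly easier for the focal group, so the two curves cross at theta = 0.
reference = [(1.0, -1.0, 0.2), (1.0, 1.0, 0.2)]
focal = [(1.0, -0.8, 0.2), (1.0, 0.8, 0.2)]
sdtf, udtf = dtf_statistics(reference, focal)
print(f"signed DTF = {sdtf:.4f}, unsigned DTF = {udtf:.4f}")
```

In this constructed example the signed statistic is essentially zero while the unsigned statistic is small but positive, which is the pattern of crossing curves whose effects cancel out.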

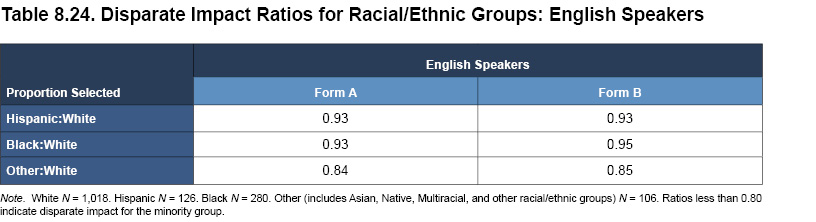

Special Topic: Disparate Impact

To further examine the fairness of the Ortiz PVAT, the potential for disparate impact of Ortiz PVAT scores was evaluated using the four-fifths rule (Fassold, 2000). This rule, commonly applied in employment and educational contexts, identifies practically meaningful differences in outcomes between racial or ethnic groups. It compares the percentage of a minority group that attains a desirable outcome with the percentage of the majority group that attains the same outcome. If this ratio is lower than .80, the risk of disparate impact is considered substantial, indicating possible bias against the minority group, whose likelihood of attaining the desirable outcome is less than four-fifths that of the majority group (Uniform Guidelines on Employee Selection Procedures, 1978). For the Ortiz PVAT, White individuals represented the majority group, and Hispanic, Black, and all other racial/ethnic groups represented the minority groups. A desirable outcome was defined as a standard score of 90 or greater (i.e., a classification of Average or above). First, the percentage of individuals with standard scores of 90 or greater was computed for each racial/ethnic group within the English Speaker normative sample; then the ratio of each minority group’s percentage to the majority group’s percentage was calculated (see Table 8.24). In accordance with the four-fifths rule, no evidence of disparate impact was found for any of the racial/ethnic group comparisons.
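The computation described above reduces to a ratio of two proportions. The sketch below applies it with the cutoff of 90 used here; the function names are illustrative, and the scores are invented and are not taken from Table 8.24.

```python
def outcome_rate(scores: list[float], cutoff: float = 90) -> float:
    """Proportion of a group attaining the desirable outcome (standard score >= cutoff)."""
    return sum(score >= cutoff for score in scores) / len(scores)


def impact_ratio(minority: list[float], majority: list[float], cutoff: float = 90) -> float:
    """Minority group's outcome rate divided by the majority group's outcome rate."""
    return outcome_rate(minority, cutoff) / outcome_rate(majority, cutoff)


def flags_disparate_impact(ratio: float, threshold: float = 0.80) -> bool:
    """Four-fifths rule: a ratio below .80 signals substantial risk of disparate impact."""
    return ratio < threshold


# Made-up standard scores, for illustration only.
majority = [85, 92, 100, 110]            # 3 of 4 at or above 90 -> .75
minority_1 = [88, 95, 101, 89, 97, 104]  # 4 of 6 -> about .67
minority_2 = [80, 85, 91, 88, 95]        # 2 of 5 -> .40

for name, group in (("minority_1", minority_1), ("minority_2", minority_2)):
    ratio = impact_ratio(group, majority)
    print(f"{name}: ratio = {ratio:.2f}, flagged = {flags_disparate_impact(ratio)}")
```

Here the first hypothetical group’s ratio (about .89) passes the four-fifths threshold, while the second (about .53) would be flagged.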

Demographic Effects for English Learners

Gender

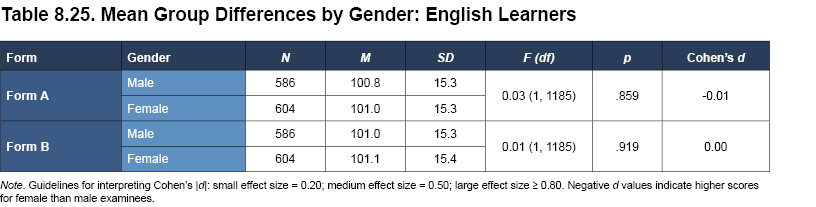

Analyses were conducted to test the relation between scores on the Ortiz PVAT and gender. For scores on Form A and Form B, gender differences were investigated in the English Learner normative sample, with region, PEL, and language spoken included as covariates to control for their possible effects. For both forms, there was no evidence of gender differences (see Table 8.25). The main effect of gender was not significant, and effect sizes were negligible, demonstrating that receptive vocabulary comprehension ability is measured in a similar manner across genders.

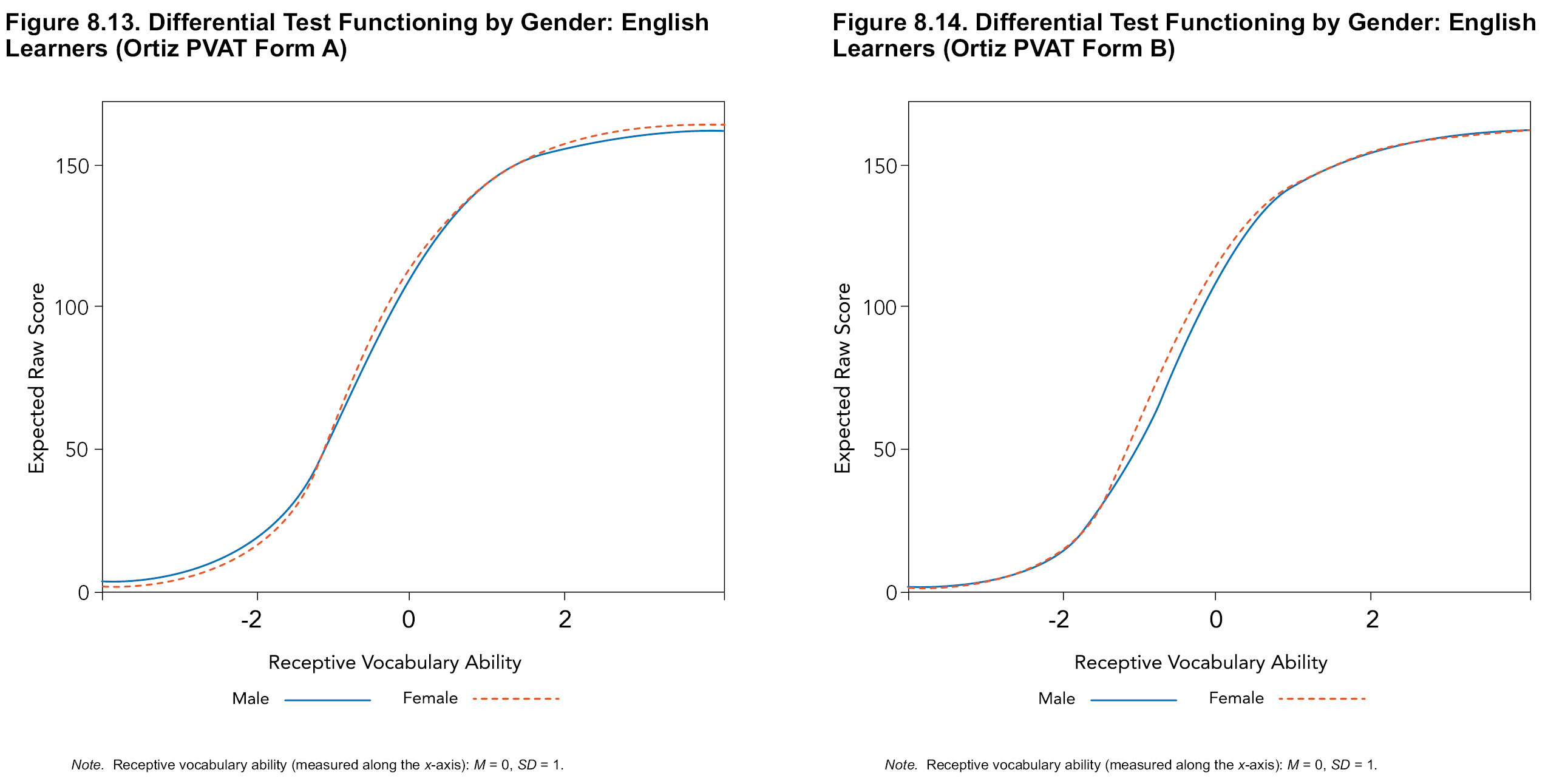

DTF graphs of each gender’s expected raw score as a function of receptive vocabulary ability are shown for Form A and Form B in Figures 8.13 and 8.14. The graphs for male and female examinees in this sample are remarkably parallel, indicating that neither Form A nor Form B functions differently by gender. The Ortiz PVAT measures receptive vocabulary ability in the same manner for both genders of the English Learner normative sample. This finding provides support for the invariance of the test by demonstrating a lack of empirical bias with regard to gender groups.

Parental Education Level

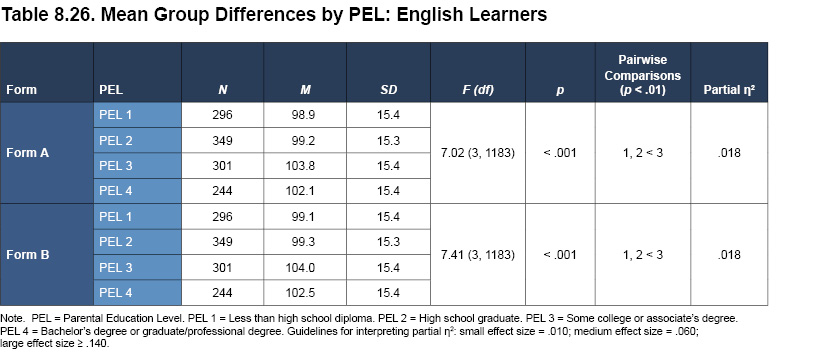

The vocabulary of children is influenced by PEL (Hart & Risley, 2003); therefore, it is important to consider differences in scores by PEL in the English Learner sample. Gender, geographic region, and language spoken were included as covariates to control for possible confounding effects. A significant main effect was observed for both forms, indicating differences among the four levels of PEL (see Table 8.26), but the size of this effect is small. Because the effect is small, these differences may have limited practical importance.

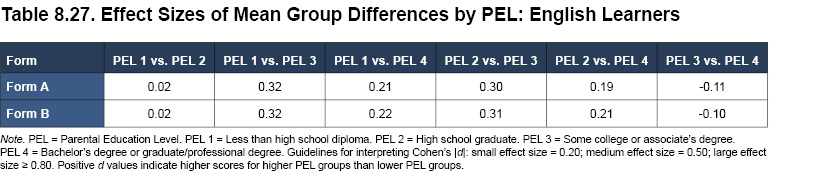

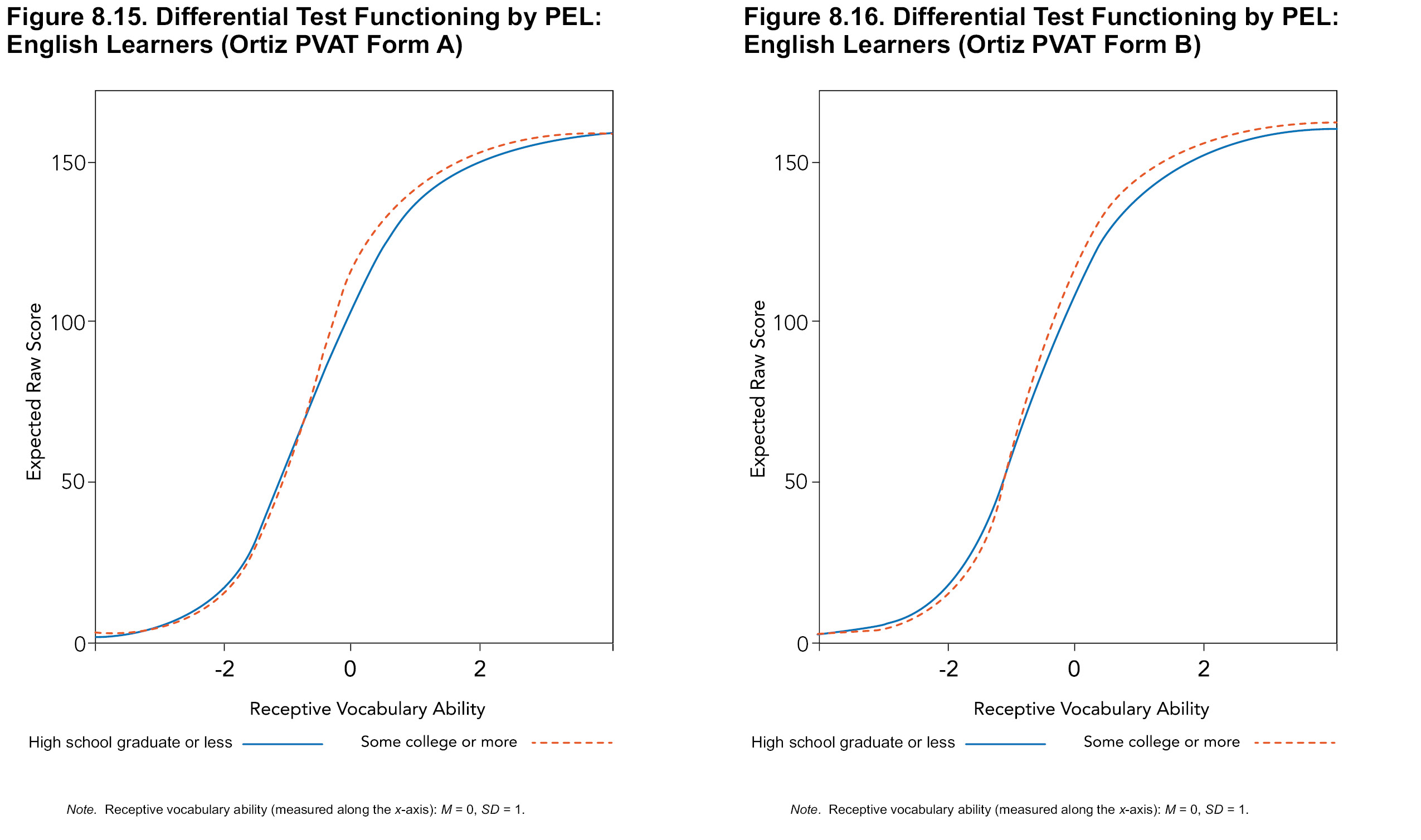

Given the overall effect, pairwise comparisons were examined, and the size of the differences was assessed with Cohen’s d (see Table 8.27). Medium-sized differences were observed between examinees whose parents had Some college or associate’s degree and those whose parents had either of the two lower education levels (i.e., Less than high school diploma and High school graduate). The direction of these effects is in line with existing research showing that higher parental education contributes to greater vocabulary ability in children (e.g., Sénéchal & LeFevre, 2002; see also Incidental Learning Experiences in chapter 2, Theory and Background).

Although an effect of PEL was expected and observed in the English Learner normative sample, this result alone does not indicate test bias. DTF by PEL was investigated to explore the potential for bias beyond group mean score differences. PEL was divided into two levels (High school graduate or less, and Some college or more); the DTF results are plotted in Figure 8.15 for Form A and Figure 8.16 for Form B. The largely parallel curves show that the Ortiz PVAT does not function differently across levels of parental education; the slope and position of the curves are nearly identical, indicating congruence of measurement. The curves run very close together and cross at some points; that is, one group may have a slight advantage in one range of ability, while the other group has a slight advantage in another. Overall, these effects cancel each other out. For both Form A and Form B, the DTF results provide little evidence of empirical bias in the Ortiz PVAT.

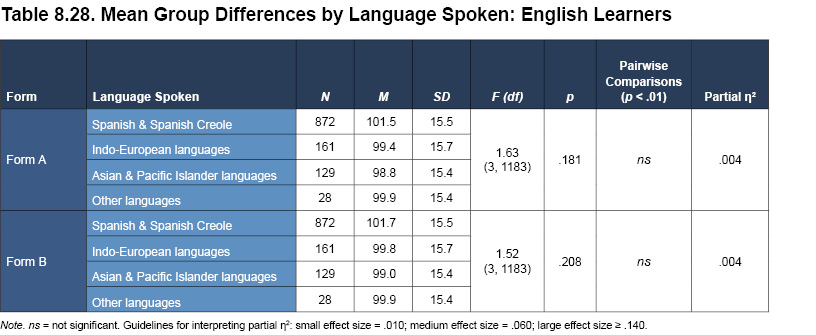

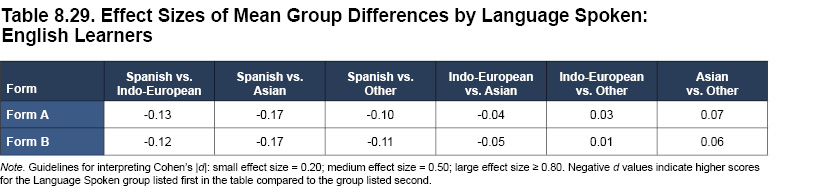

Language Spoken

A unique element of the English Learner normative sample is its stratification by the language spoken by participants. Four major language groups were targeted, in proportions representative of U.S. Census figures: Spanish & Spanish Creole, Indo-European languages (this category includes all Indo-European languages other than Spanish), Asian & Pacific Islander languages, and Other languages. Differences in scores between these language groups were analyzed, with additional demographic characteristics (gender, PEL, and geographic region) controlled by their inclusion as covariates. Results are presented in Tables 8.28 and 8.29; no statistically significant differences were observed between the language groups. These results are in line with expectations: no language group was expected to outperform any other, given that the Ortiz PVAT is designed to assess receptive vocabulary ability in English only. Note that DTF analyses were not conducted by language group, because stratifying to U.S. population proportions produced unequal group sample sizes.

Summary of Demographic Effects

Results from several analyses provide support for the generalizability of Ortiz PVAT scores. No evidence of test bias was found with respect to gender or PEL (in either normative sample) or race/ethnicity (in the English Speaker sample), and scores did not differ significantly by language spoken (in the English Learner sample). Together, these results provide strong evidence that the Ortiz PVAT meets the fairness requirements outlined in the Standards.