Validity

Validity refers to the accuracy of measurement of the intended construct, or the degree to which evidence and theory support the interpretation and intended use of the assessment (AERA, APA, & NCME, 2014). Multiple sources of validity evidence are considered when designing and evaluating an assessment. For the Ortiz PVAT, validity evidence based on test content, internal structure, relations to criterion variables, and relations to other measures was investigated.

Content-Related Validity

Validity evidence based on test content is established when there is correspondence between the test items and the ability associated with the construct being measured (e.g., receptive vocabulary ability on the Ortiz PVAT) and/or the stated objectives of the instrument (Crocker & Algina, 1986).

Content-related validity of the Ortiz PVAT was assessed through extensive subject-matter expert review. The panel of experts included speech-language pathologists and psychologists, all of whom had extensive knowledge of language development and of the assessment of culturally and linguistically diverse populations. As described in detail in chapter 6, Development, the subject-matter experts independently inspected the test content for appropriateness with regard to the construct of receptive vocabulary. They reviewed the difficulty of the items for the intended age range, the visual depiction of the target words and distractors, and potential bias related to gender, race/ethnicity, cultural background, or socioeconomic status. Test content was reviewed to ensure that the items appropriately sample the construct of interest and are suitable for the test’s intended use. Only items deemed appropriate by the subject-matter experts were included in the empirical analyses, which examined how accurately the items assess receptive vocabulary. The resulting item pool, as determined by the expert reviewers, supports the content-related validity of the retained items on the Ortiz PVAT.

Internal Structure

The underlying structure of the Ortiz PVAT items was investigated to provide evidence for the unidimensional nature of receptive vocabulary and to evaluate the unidimensionality assumption underlying item response theory (IRT) analyses (AERA, APA, & NCME, 2014). The Ortiz PVAT was hypothesized to assess a unidimensional construct, best explained by a single-factor model, because all items were designed to measure the same central construct of receptive vocabulary knowledge. The dimensionality of the Ortiz PVAT was explored using full-information item factor analysis (via the mirt R package; Chalmers, 2012). Results for the one-factor model were favorable, with moderate to high factor loadings for all items (range = .428 to .956). To further evaluate the appropriateness of the one-factor model, it was compared with a two-factor model. Although the two models differed significantly (χ²(307) = 5422.2, p < .001), inspection of the two-factor loadings revealed that the two factors simply separated easier items from harder items. The two-factor solution may lend support to the theorized structural distinction between Basic Interpersonal Communication Skills (BICS) and Cognitive Academic Language Proficiency (CALP) vocabulary words, as discussed in chapter 2, Theory and Background. However, the additional complexity of a multifactor model was not justified because it did not improve interpretation beyond that of a unidimensional model, so the more parsimonious single-factor model was selected for the Ortiz PVAT.
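The one- versus two-factor comparison above is a nested-model likelihood-ratio (chi-square difference) test. The sketch below shows only the arithmetic, assuming the log-likelihoods of the two fitted models and the difference in their parameter counts are available (in the mirt package such a comparison is typically obtained by calling `anova()` on two fitted models). The function names `chi2_sf` and `lr_test` are illustrative, not part of any package; the tail probability uses the closed-form series for integer degrees of freedom, so only the Python standard library is needed.

```python
import math


def _logsumexp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    top = max(values)
    return top + math.log(sum(math.exp(v - top) for v in values))


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X > x) for a chi-square variable with integer df."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: sum_{k=0}^{df/2 - 1} exp(-x/2) * (x/2)^k / k!, summed in log space.
        logs = [-half + k * math.log(half) - math.lgamma(k + 1) for k in range(df // 2)]
        return min(1.0, math.exp(_logsumexp(logs)))
    # Odd df: erfc(sqrt(x/2)) + sqrt(2/pi) * exp(-x/2) * sum_{k=1}^{m} x^(k - 1/2) / (2k - 1)!!
    tail = math.erfc(math.sqrt(half))
    m = (df - 1) // 2
    if m == 0:
        return tail
    logs = []
    for k in range(1, m + 1):
        # log((2k - 1)!!) = log((2k)!) - k*log(2) - log(k!)
        log_double_fact = math.lgamma(2 * k + 1) - k * math.log(2.0) - math.lgamma(k + 1)
        logs.append(0.5 * math.log(2.0 / math.pi) - half + (k - 0.5) * math.log(x) - log_double_fact)
    return min(1.0, tail + math.exp(_logsumexp(logs)))


def lr_test(loglik_restricted: float, loglik_full: float, df_diff: int):
    """Chi-square difference test for nested models (e.g., one- vs. two-factor)."""
    stat = 2.0 * (loglik_full - loglik_restricted)
    return stat, chi2_sf(stat, df_diff)
```

Applied to the reported statistic, `chi2_sf(5422.2, 307)` is effectively zero, consistent with p < .001. With large samples this test tends to reject the simpler model even for modest improvements in fit, which is one reason the interpretability of the extra factor, and not significance alone, matters when choosing between models.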

In addition, the assumption of local independence was tested by examining the tetrachoric correlation matrix and the associated residual error terms. The inter-item correlations ranged from -.47 to .73 (median r = .044), suggesting related content without statistical redundancy, and residual values were low, ranging from -1.9 to 0.9. To further explore the dimensionality of the assessment, factor extraction via PARAMAP (O’Connor, 2016) was used to examine the size of the inter-item correlations after extraction of the first factor. Because a single factor of receptive vocabulary acquisition should explain the relationships among items, inter-item correlations were expected to shrink after extraction. In this analysis, a decrease in correlation coefficients was observed (only 2% of residual inter-item correlations exceeded .30; median r = .046).
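The post-extraction check can be illustrated with the partialling step of Velicer’s minimum average partial (MAP) procedure, one of the procedures available in PARAMAP: remove the first principal component from the correlation matrix and rescale the residuals into partial correlations. This is a minimal sketch, not PARAMAP’s code. It assumes a complete, positive definite inter-item correlation matrix (such as the tetrachoric matrix) and finds the first component by power iteration. Summarizing absolute values for the median is also an assumption, because the text does not say whether the reported median is of signed or absolute correlations.

```python
import math
from statistics import median


def first_component_loadings(R, iters=1000, tol=1e-12):
    """Loadings on the first principal component of correlation matrix R (power iteration)."""
    n = len(R)
    v = [1.0 / math.sqrt(n)] * n
    eigenvalue = 0.0
    for _ in range(iters):
        w = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))  # equals the eigenvalue once v has converged
        v_new = [x / norm for x in w]
        converged = max(abs(a - b) for a, b in zip(v_new, v)) < tol
        v, eigenvalue = v_new, norm
        if converged:
            break
    return [math.sqrt(eigenvalue) * x for x in v]


def partial_after_first_component(R):
    """Partial correlations after removing the first component (MAP step m = 1).

    Assumes no loading equals 1, otherwise the denominator is zero.
    """
    a = first_component_loadings(R)
    n = len(R)
    P = [[1.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                residual = R[i][j] - a[i] * a[j]
                P[i][j] = residual / math.sqrt((1 - a[i] ** 2) * (1 - a[j] ** 2))
    return P


def summarize_off_diagonal(P, threshold=0.30):
    """Share of |r| above threshold and median |r| over the upper triangle."""
    vals = [abs(P[i][j]) for i in range(len(P)) for j in range(i + 1, len(P))]
    share_above = sum(v > threshold for v in vals) / len(vals)
    return share_above, median(vals)


def mean_squared_off_diagonal(M):
    """Average squared off-diagonal correlation, the quantity MAP minimizes."""
    vals = [M[i][j] ** 2 for i in range(len(M)) for j in range(i + 1, len(M))]
    return sum(vals) / len(vals)
```

As a constructed (hypothetical) example, eight items that all intercorrelate at .49 have every partial correlation reduced to -1/7 (about -.14) after the first component is removed, none above .30, and the average squared off-diagonal value drops from .2401 to about .020.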

Together, these results indicate that a single underlying construct appears to explain the interrelatedness of the items and provide support for the unidimensionality of receptive vocabulary acquisition as assessed by the Ortiz PVAT.

Relations to Other Variables

Beyond content-related and structural evidence, additional evidence for the validity of an assessment is derived from its associations with criterion variables and with conceptually related constructs (AERA, APA, & NCME, 2014). First, evidence regarding relations to criterion variables (also called predictive or criterion-related validity) refers to whether the scores demonstrate meaningful and expected relationships to specified external criteria or outcomes, such as group membership or clinical diagnosis (Crocker & Algina, 1986). Second, evidence regarding conceptually related constructs (formerly termed convergent validity) refers to whether test scores demonstrate relations with scores from other established measures and/or behaviors that are expected to be associated with the construct in question. This type of validity evidence is crucial in demonstrating that the construct is properly measured (i.e., accurately portrays all aspects of the construct of interest and relates to other measures as expected; Crocker & Algina, 1986).

Relation to Clinical Diagnoses

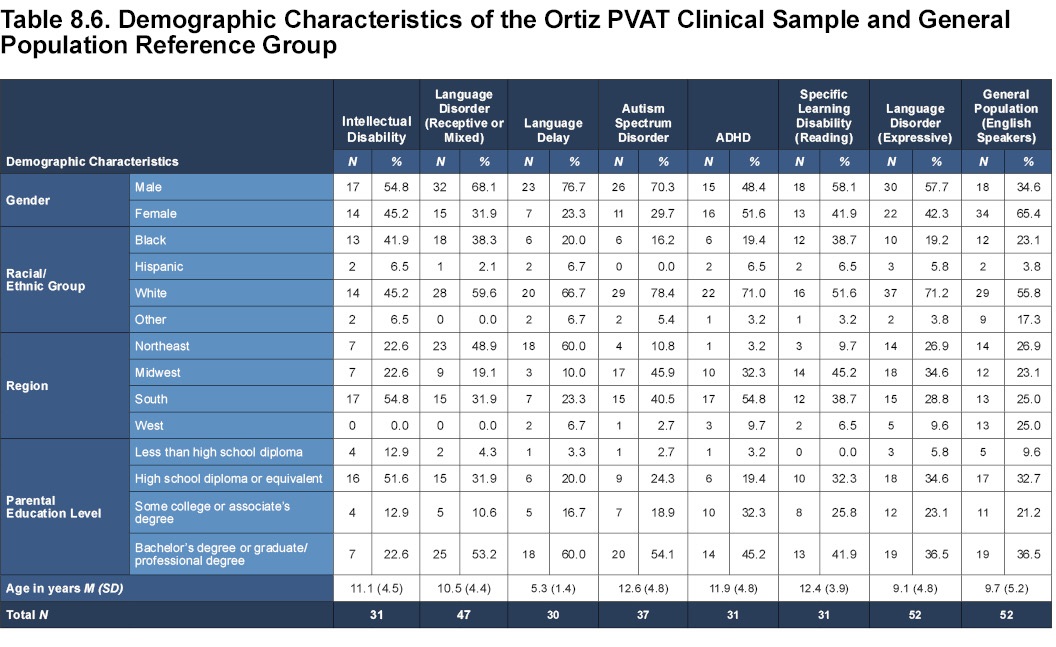

To extend the results beyond the general population presented in the normative studies, a clinical validation study was conducted to explore the appropriateness of using the Ortiz PVAT with special populations. For the clinical sample (N = 278), diagnostic information was obtained from a licensed professional (e.g., clinical psychologist, speech-language pathologist, or school psychologist). Data from children, youth, or young adults diagnosed with a specific psychological or communication disorder (e.g., language disorder, anxiety, depression) were included in the analyses if the following criteria were met: (a) a single primary diagnosis was indicated, (b) the diagnosis was made by a qualified professional, and (c) appropriate methods (e.g., record review, rating scales, observation, or interviews) were used during diagnosis. For all clinical cases, the clinical professional applied the relevant criteria from the Diagnostic and Statistical Manual of Mental Disorders (DSM-IV-TR; APA, 2000; DSM-5; APA, 2013), the International Statistical Classification of Diseases and Related Health Problems, 10th Revision (ICD-10; World Health Organization, 2015), or the Individuals with Disabilities Education Act (IDEA, 2004).

The clinical sample included the following clinical groups, which were hypothesized to have some degree of difficulty with receptive vocabulary acquisition (because such difficulty is included in the DSM diagnostic criteria for these groups; APA, 2013) and therefore to obtain lower scores on the Ortiz PVAT:

- Intellectual Disability (ID)

- Language Disorder with receptive (or mixed receptive-expressive) impairment

- Language Delay with receptive (or mixed receptive-expressive) impairment

A separate clinical sample of individuals with Autism Spectrum Disorder (ASD) was also collected. The diagnostic criteria for ASD indicate potential impairment in language ability and acquisition but do not specify whether the deficit involves expressive or receptive language (APA, 2013). As such, this group was expected to score somewhat lower than the General Population (due to broad language-related impairment) but not as low as the groups listed above, whose diagnostic criteria describe specific receptive impairment.

Additionally, data were collected from the following clinical groups, which at the group level typically do not show problems with receptive vocabulary ability (i.e., the DSM does not list receptive impairment as a symptom; APA, 2013); these groups were expected to obtain scores close to average on the Ortiz PVAT:

- Specific Learning Disability with impairment in reading

- Attention-Deficit/Hyperactivity Disorder (ADHD; including Predominantly Hyperactive presentation, Predominantly Inattentive presentation, and Combined presentation)

- Language Disorder with expressive impairment

Participants in these clinical samples were categorized as monolingual English speakers because they met the same criteria as the English Speaker normative sample (see chapter 7, Standardization, for details about the inclusionary criteria). The demographic characteristics of the clinical samples, as well as those of a randomly selected subsample of the English Speaker normative sample used as a reference group (referred to as the General Population), are presented in Table 8.6.

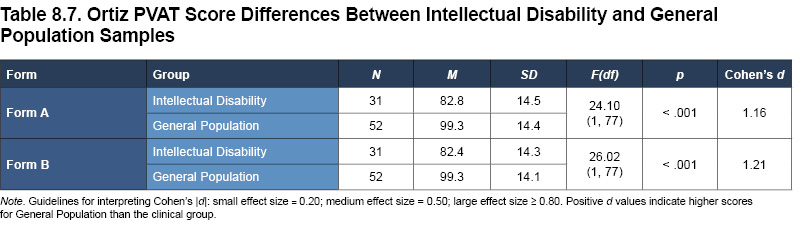

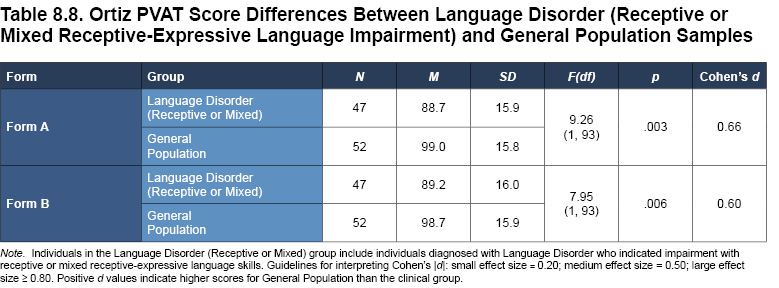

To explore these results in more detail, a series of analyses of covariance (ANCOVAs) was conducted comparing the standard scores of each clinical sample with those of the General Population group. The standard score on Form A or Form B was the dependent variable, clinical diagnosis (or absence of diagnosis) was the independent variable, and relevant demographic characteristics (i.e., gender, race/ethnicity, geographic region, and parental education level [PEL]) were included as covariates to control for their possible effects. A conservative alpha level of .01 was used to evaluate statistical significance, reducing the risk of Type I errors across the multiple comparisons. In addition to significance levels, standardized mean differences (Cohen’s d effect sizes) were evaluated when comparing each clinical sample with the General Population reference group. The following tables present the results of the ANCOVAs, along with the means, standard deviations, and Cohen’s d effect sizes. Because covariates were included in the analyses, estimated marginal means are reported, as they describe the group means after controlling for the covariates.
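For reference, a common way to compute Cohen’s d divides the difference between two means by a pooled standard deviation. The manual does not state exactly which means (raw or estimated marginal) or which standard deviation entered each d, so the sketch below is an assumption rather than the documented procedure, and `cohens_d` is an illustrative name. As a consistency check, the English learner values reported later in this chapter (PPVT-4: M = 91.6, SD = 16.9; Ortiz PVAT: M = 99.9, SD = 12.6) with equal-n pooling give about -0.56, matching the reported d = -0.56; this is consistent with, but does not confirm, this pooling. The sign simply reflects which mean is subtracted from which.

```python
import math


def cohens_d(mean_1, sd_1, mean_2, sd_2, n_1=None, n_2=None):
    """Standardized mean difference (mean_1 - mean_2) / pooled SD.

    With sample sizes, each group's variance is weighted by n - 1;
    without them, the pooled SD is the root mean of the two variances
    (the equal-n case).
    """
    if n_1 is None or n_2 is None:
        pooled_var = (sd_1 ** 2 + sd_2 ** 2) / 2.0
    else:
        pooled_var = ((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2)
    return (mean_1 - mean_2) / math.sqrt(pooled_var)


# English learner example from this chapter: PPVT-4 minus Ortiz PVAT.
d = cohens_d(91.6, 16.9, 99.9, 12.6)
print(round(d, 2))
```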

As seen in Tables 8.7 to 8.9, medium-to-large effect sizes were observed (as expected) when comparing the General Population with three of the four clinical groups whose DSM diagnostic criteria relate to receptive language ability. Across the Intellectual Disability, Language Disorder (Receptive), and Language Delay clinical groups, the median effect size was medium (Form A: median d = 0.66; Form B: median d = 0.60; range of effect sizes across forms: 0.48–1.21). These results demonstrate a clear difference in ability between the General Population, which would not be expected to display receptive language impairment, and each of these groups, which would be expected to display such impairment based on theory and past research.

The largest effect sizes were seen in the Intellectual Disability group, who scored more than a standard deviation lower than the General Population group (Form A: d = 1.16; Form B: d = 1.21). Low scores were expected for this group because of the strong relationship between vocabulary and general cognitive ability.

Individuals with Language Disorder whose diagnoses indicated receptive or mixed receptive-expressive impairment also scored substantially lower than the General Population (Form A: d = 0.66; Form B: d = 0.60). This difference is of particular interest, as a main goal of the development of the Ortiz PVAT was to differentiate between individuals with and without receptive language impairment.

Smaller, but notable, effects were found for youth identified with a Language Delay, as their Ortiz PVAT scores were lower than the General Population (Form A: d = 0.51; Form B: d = 0.48).

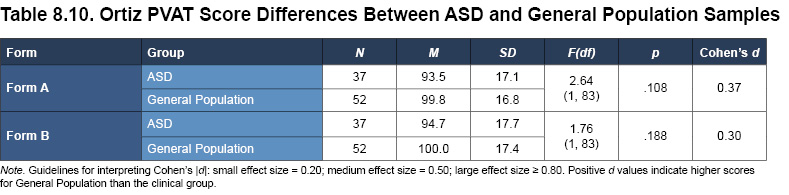

Individuals with ASD may be expected to show language deficits in social communication, often exhibited as limited speech or the use of single words or short phrases. Note, however, that although ASD can feature language deficits, these problems may not manifest as deficits in receptive vocabulary ability. ASD is a spectrum comprising a variety of behaviors and impairments, ranging from mild to severe, and some individuals with this diagnosis may not exhibit marked difficulty with receptive vocabulary acquisition specifically. Consistent with this, as seen in Table 8.10, the ASD sample scored lower on the Ortiz PVAT than the General Population sample, but the difference was small and nonsignificant (Form A: p = .108, d = 0.37; Form B: p = .188, d = 0.30). On closer inspection, the ASD sample can be divided into individuals with receptive or mixed receptive-expressive impairment (n = 15) and individuals with expressive impairment (n = 22). As would be expected, the subsample with receptive impairment (Form A: M = 90.9; Form B: M = 91.3) scored lower than the subsample with expressive impairment (Form A: M = 96.8; Form B: M = 99.1), although the small subsample sizes limit further interpretation. Further research is needed to explore the relationship between receptive vocabulary and ASD.

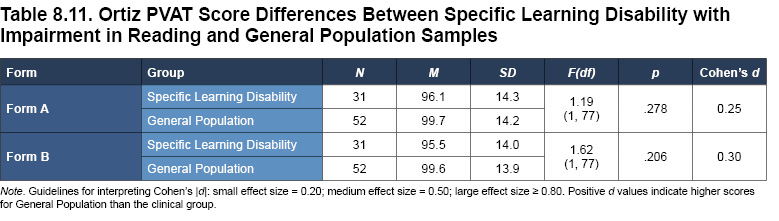

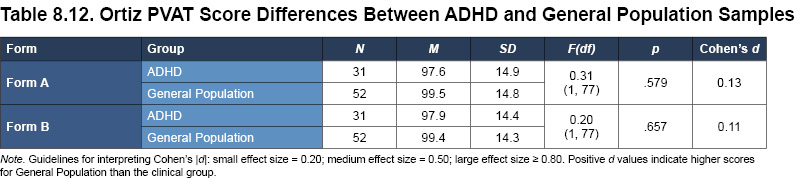

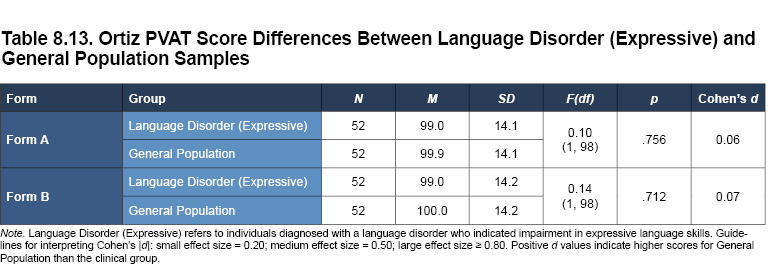

Specific Learning Disability with impairment in reading, ADHD, and Language Disorder (with expressive impairment) are alike in that impairment in receptive vocabulary ability or acquisition is not a DSM diagnostic criterion for any of them. As expected, no significant differences were observed between the Ortiz PVAT scores of these clinical groups and those of the General Population (see Tables 8.11 to 8.13). The median effect size across the three groups, relative to the General Population sample, was small (Form A: median d = 0.13; Form B: median d = 0.11; range of effect sizes across forms: 0.06–0.30), consistent with the hypothesized lack of difference.

The clinical group diagnosed with Specific Learning Disability with impairment in reading did not differ significantly from the General Population sample (p > .01; see Table 8.11). Individuals with a reading-related disability may be somewhat disadvantaged in vocabulary acquisition, given that reading is often considered one of the best ways to learn new vocabulary, but receptive vocabulary impairment is not explicitly defined as a symptom or key feature of this disability. The mean score of the Specific Learning Disability group appears to reflect this disadvantage to a small extent, as it falls below the normative mean of 100 (note the small effect size; Form A: d = 0.25; Form B: d = 0.30). However, because the Ortiz PVAT is a receptive vocabulary test that requires no reading on the examinee’s part, it is reasonable that the average performance of the Specific Learning Disability group is statistically similar to that of the General Population.

As anticipated, individuals with ADHD did not show evidence of receptive language impairment: their scores did not differ significantly from those of the General Population, and effect sizes were negligible (Form A: p = .579, d = 0.13; Form B: p = .657, d = 0.11; see Table 8.12). This result may reflect the brevity and interactivity of the test format, which may allow Ortiz PVAT scores to capture the true receptive language ability of these individuals rather than being confounded by difficulty maintaining attention or controlling impulsivity.

Individuals with Language Disorder (Expressive) did not differ significantly from the General Population (p > .01; see Table 8.13). This finding is important, given that the Ortiz PVAT is designed to assess only receptive language ability. Together with the lower scores of the Language Disorder (Receptive) group, it suggests that the Ortiz PVAT is sensitive to the difference between receptive and expressive impairment in Language Disorder, rather than to impairment in vocabulary or language ability in a general sense.

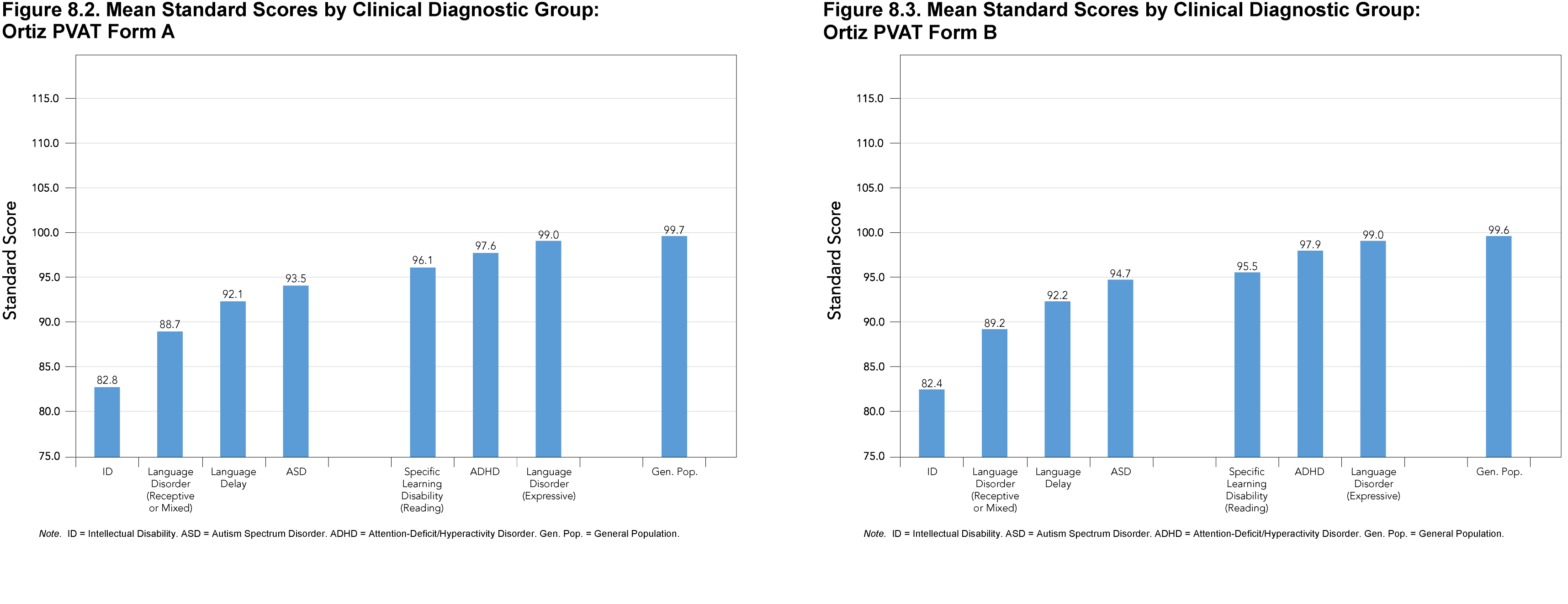

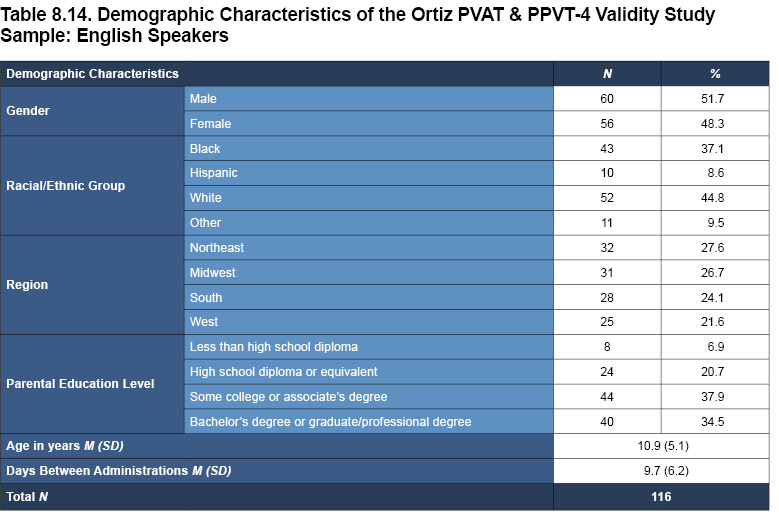

Overall, statistically significant and practically meaningful effects were generally observed for the clinical groups whose DSM diagnostic criteria include receptive language impairment, and nonsignificant effects were observed for the clinical groups for which receptive language impairment is not a DSM diagnostic criterion. Figures 8.2 (Form A) and 8.3 (Form B) depict the differences between groups: the clinical groups known to have difficulty with receptive vocabulary acquisition scored lower than both the General Population and the clinical groups without receptive vocabulary difficulties. This pattern of results supports the validity of the Ortiz PVAT as a measure of receptive vocabulary acquisition and its sensitivity to varying degrees of impairment.

Relation to Other Measures of Receptive Vocabulary

Ortiz PVAT and the Peabody Picture Vocabulary Test, Fourth Edition

The association between scores on the Ortiz PVAT and scores on another receptive vocabulary assessment was explored to determine whether the two tests converge in measuring the same construct. The Peabody Picture Vocabulary Test, Fourth Edition (PPVT-4; Dunn & Dunn, 2007) is a norm-referenced measure of receptive vocabulary for children and adults from age 2 years 6 months to age 90 years. Administration of the PPVT-4 is similar to that of the Ortiz PVAT: the administrator says the stimulus word, the examinee is presented with four illustrations, and the examinee selects the illustration that best matches the stimulus word. As on the Ortiz PVAT, PPVT-4 scores are scaled to a mean of 100 and a standard deviation of 15, with higher scores indicating better performance and greater vocabulary ability.

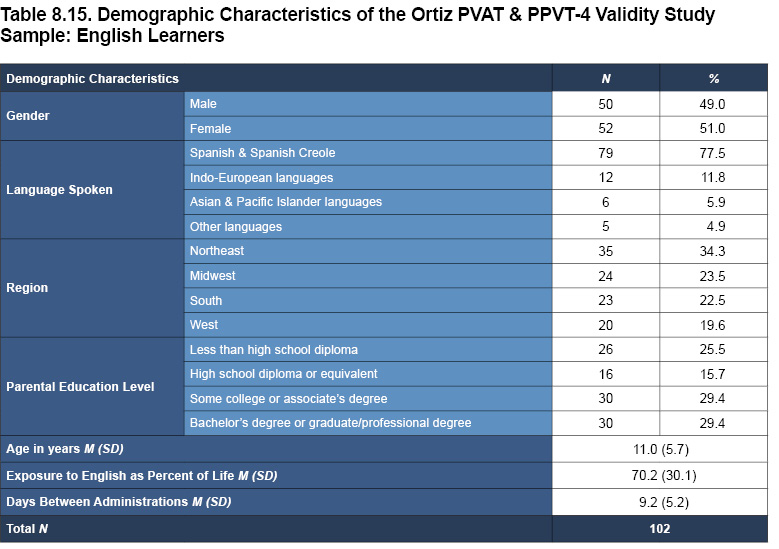

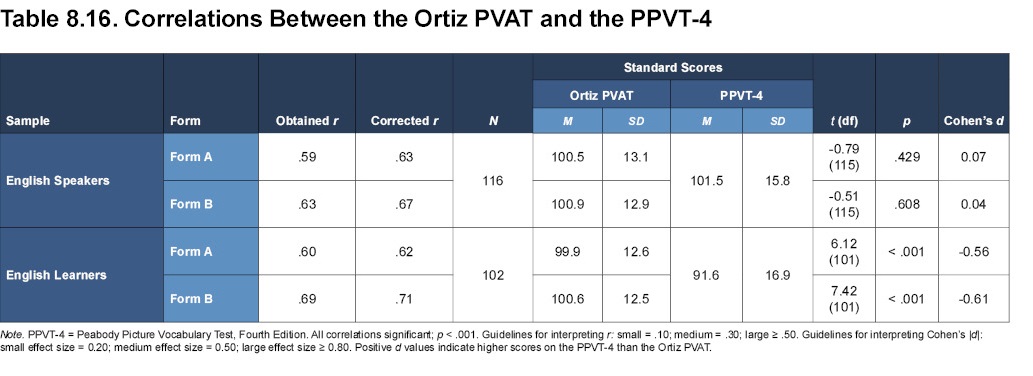

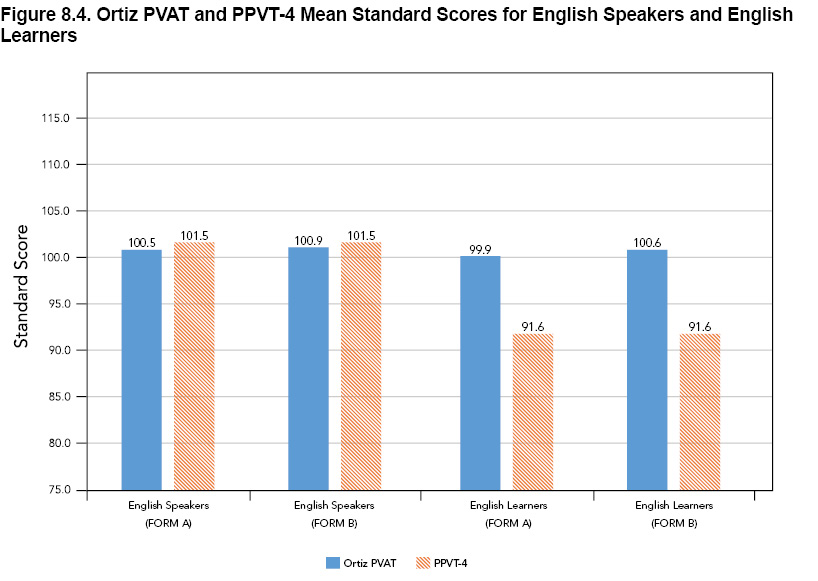

A sample was collected in which General Population participants (i.e., individuals with no language disorder or other clinical diagnosis) completed both the Ortiz PVAT and the PPVT-4. The sample comprised English speakers (N = 116) and English learners (N = 102). Ortiz PVAT scores for the English speaker subsample are based on the English Speaker norms, and Ortiz PVAT scores for the English learner subsample are based on the English Learner norms. PPVT-4 scores are based on the PPVT-4 standardization sample, which makes no explicit mention of primary language or exposure to English. The order of administration of the Ortiz PVAT and the PPVT-4 was counterbalanced, and both tests were administered within a two-week period. The demographic characteristics of each subsample are presented in Tables 8.14 and 8.15 and reflect a diverse cross-section of the population.

Because both assessments were designed to measure receptive vocabulary, a moderate-to-high correlation was expected as evidence of convergent validity. Scores on the two assessments were compared using Pearson’s correlation coefficient. Corrected correlations, which adjust for sampling variability and restriction of range, are also presented.
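The manual does not specify which corrections were applied. Two common choices are sketched below as assumptions: Thorndike’s Case II formula, which adjusts for range restriction when a sample’s standard deviation differs from the population value (15 for the standard scores of both tests), and the approximate Olkin-Pratt adjustment for the small downward bias of r in finite samples. The function names are illustrative, and the order in which the corrections are applied is a further assumption.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correct_range_restriction(r, sample_sd, population_sd=15.0):
    """Thorndike Case II correction for direct range restriction on one variable."""
    u = population_sd / sample_sd
    return r * u / math.sqrt(1 - r ** 2 + (r ** 2) * (u ** 2))


def correct_small_sample_bias(r, n):
    """Approximate Olkin-Pratt adjustment: r * (1 + (1 - r^2) / (2 * (n - 3)))."""
    if n <= 3:
        raise ValueError("n must be greater than 3")
    return r * (1 + (1 - r ** 2) / (2 * (n - 3)))
```

For a hypothetical observed r = .50 in a sample whose standard deviation is 12 rather than 15, the range correction raises the estimate to about .585; when the sample SD already equals 15, the correction leaves r unchanged.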

Results from the convergent validity analysis are presented in Table 8.16. For the English Speaker sample, the two assessments demonstrate moderate-to-large positive correlations (corrected r = .63 and .67 for Form A and Form B, respectively; p < .001). Moreover, the mean standard scores on both the Ortiz PVAT and PPVT-4 are close to 100.0 (as expected due to the similarity of this sample’s composition to the general population), and the Cohen’s d estimates of effect size are negligible, indicating no meaningful difference between the two scores. These results provide strong evidence for the similarity of construct measurement across the two assessments.

For English learners, scores on the Ortiz PVAT and PPVT-4 are also strongly and positively correlated (corrected r = .62 for Form A and .71 for Form B; both p < .001), providing further support for the convergence of the two tests as measures of the same construct. However, there is a moderate difference between the mean standard scores on the two assessments (d = -0.56 and -0.61 for Form A and Form B, respectively), with PPVT-4 scores statistically significantly lower than Ortiz PVAT scores. On the Ortiz PVAT, English learners are scored against an English Learner normative peer group that accounts for each individual’s exposure to English (for more information on the importance of a relevant peer group, see chapter 2, Theory and Background, and the section on Fairness later in this chapter). As a result, and as would be expected of a nonclinical General Population sample, the mean Ortiz PVAT score of the English learners in this sample approximates the English Learner normative mean (M = 99.9, SD = 12.6), indicating typical performance relative to other English learners. In contrast, their PPVT-4 scores were markedly lower (M = 91.6, SD = 16.9), possibly because exposure to English, or being an English learner in general, is not factored into PPVT-4 scores (see Figure 8.4 for a visual summary). This result highlights the diagnostic value of comparing individuals with a relevant peer group (i.e., a separate English learner sample that also accounts for English-language exposure). In this study, the average exposure to English was substantial (70.2% of an individual’s life), yet the English learners’ PPVT-4 scores were still notably below the typical scores of English speakers, and score differences are likely to be even larger for English learners with far less exposure.

The correlation coefficients indicate that the rank ordering of English learners’ receptive language ability is similar on the PPVT-4 and the Ortiz PVAT, which provides evidence for the congruence of the two measures of receptive vocabulary ability. However, the mean score differences reveal an important distinction: the two assessments do not estimate English learners’ abilities in parallel ways. Specifically, English learners scored notably lower on the PPVT-4 than on the Ortiz PVAT, an effect likely due to the issue noted previously: the PPVT-4 norms do not include English learners as a separate peer group for comparison purposes.

Ortiz PVAT and the Wechsler Intelligence Scale for Children (WISC)

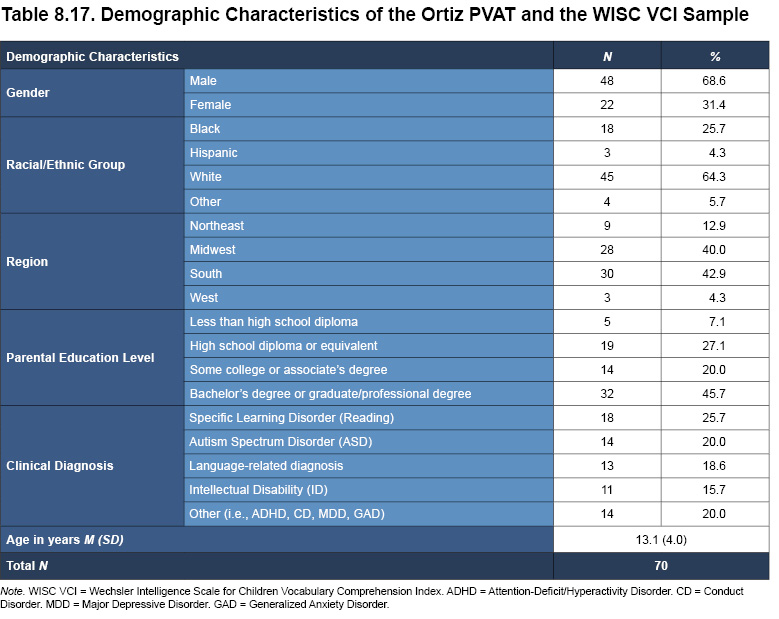

Verbal intelligence, and intelligence in general, are closely related to vocabulary (Pearson, Hiebert, & Kamil, 2007; see also chapter 2, Theory and Background). Vocabulary tests have long been included in tests of intelligence; such tests are still widely published and correlate highly with measures of comprehension (Pearson, Hiebert, & Kamil, 2007). One such measure of verbal ability is the Verbal Comprehension Index (VCI) of the Wechsler Intelligence Scale for Children (WISC). To establish evidence for convergence on the construct of vocabulary knowledge, the associations between the WISC VCI and the Ortiz PVAT were evaluated within a clinical sample. Participants in this study took the Ortiz PVAT, and their clinicians reported the participants’ VCI scores from either the WISC-IV (Wechsler, 2003) or the WISC-V (Wechsler, 2014; both referred to as WISC VCI henceforth). With regard to their language experiences, participants met the same criteria as individuals in the English Speaker normative sample. A description of the sample (N = 70) can be found in Table 8.17.

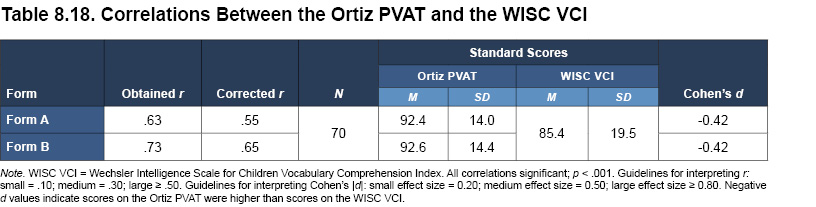

Although both assessments measure aspects of verbal ability, they differ in their features and design. The Ortiz PVAT focuses narrowly on receptive vocabulary, whereas the WISC VCI covers broader aspects of verbal functioning, with subtests for word reasoning, comprehension, and expressive vocabulary (note that the scoring of these subtests varies slightly between editions of the WISC; see Wechsler, 2014). In light of these distinctions, the two measures were expected to be associated with one another, but only to a moderate degree.

Standard scores on the two assessments were correlated using Pearson’s correlation coefficient, and the results, along with corrected correlations, are presented in Table 8.18. For both Form A and Form B, the correlations were large, positive, and statistically significant (corrected r ranged from .55 to .65; all p < .001). Table 8.18 also displays the means and standard deviations of the two assessments, as well as their standardized mean differences (Cohen’s d effect sizes). The moderate differences between Ortiz PVAT scores and WISC VCI scores (Cohen’s d = -0.42 for both forms) may be explained by the additional content areas assessed by the WISC VCI (e.g., word reasoning and comprehension). These findings indicate that although the WISC VCI and the Ortiz PVAT both include measures of vocabulary, the WISC VCI score also reflects content areas that are not captured by the Ortiz PVAT.
